Texts, images, and now even videos, music, or podcasts are increasingly being created wholly or partially with AI.

And not always with good intentions. Deepfakes, for example, can cause serious political or personal harm.

Accordingly, a labeling requirement for AI content has been discussed in politics and business for some time.

But what's the current status? Is there already a labeling requirement? And if so, from when and exactly how must AI content be labeled?

In this article, you'll learn everything you need to know about this. I'll cover all three levels (national, EU-wide, and technology companies) that could require labeling by end users or technology corporations.

- The EU AI Act introduces a labeling requirement for AI-generated content that applies from August 2026, with violations costing up to €15 million or 3% of global annual turnover

- National laws don't exist yet in most countries, but platforms like YouTube and Meta have already introduced their own labeling requirements

- Labeling must be "clear and conspicuous"; deepfakes and AI texts on topics of public interest must be labeled, with only narrow exceptions such as human editorial review

1. National Labeling Requirements

In most countries, there are currently no national guidelines or laws requiring the labeling of AI-generated content.

A labeling requirement has been under discussion for some time, however. In Germany, for example, the Left Party submitted a motion in the Bundestag in mid-June 2023 demanding a labeling requirement when AI systems are used to generate content "that is made publicly accessible or is not purely private in nature." This motion was rejected in November 2023.

In a statement, Germany's Federal Commissioner for Data Protection (BfDI) also emphasizes the importance of labeling AI content as a protective mechanism for consumers, particularly in the context of propaganda and defamatory content.

In addition, existing regulations on consumer protection, personal rights, or competition law may still apply.

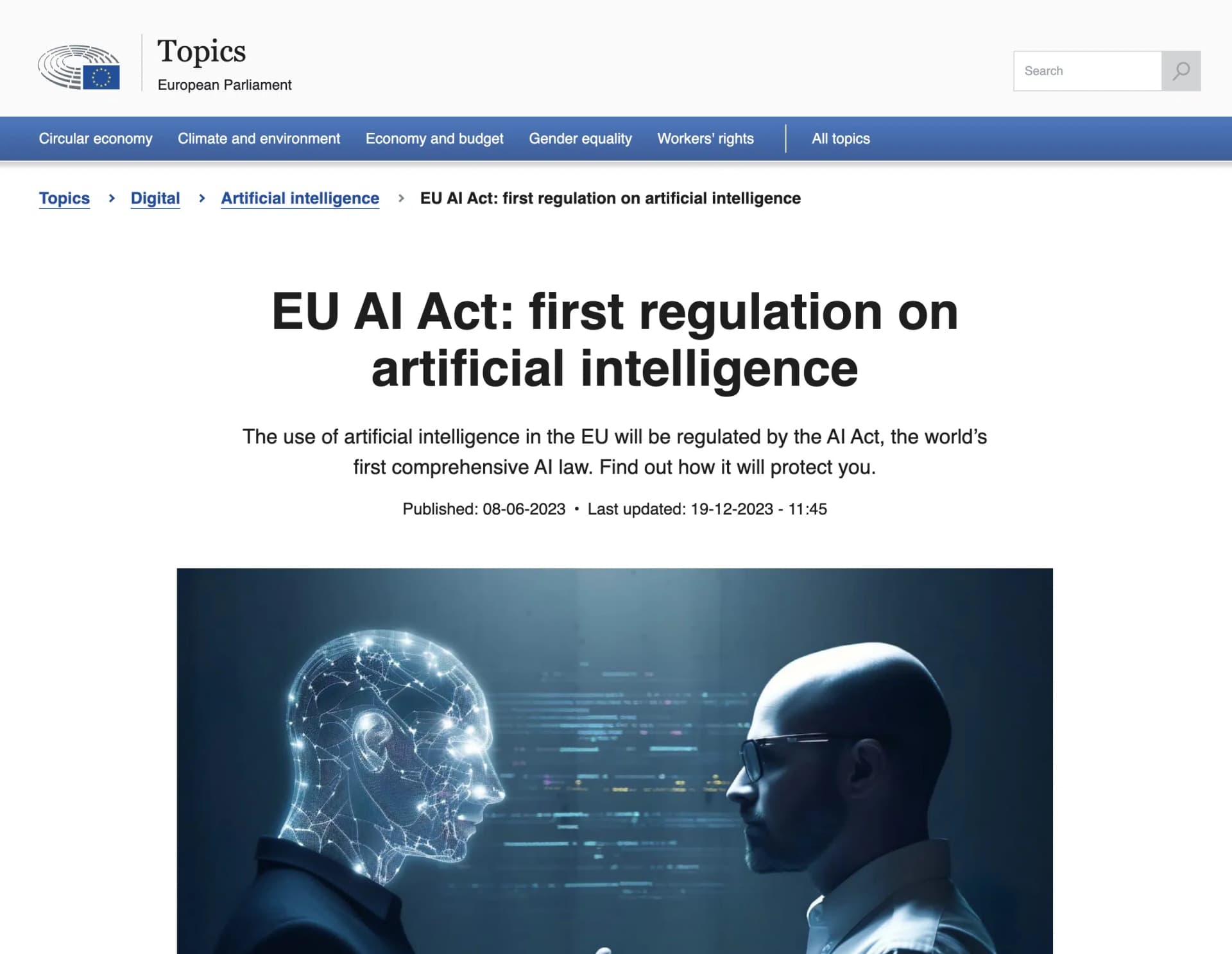

2. EU AI Act

The European Parliament approved the Artificial Intelligence Act in March 2024. It is a regulation (not a directive) that establishes EU-wide rules for AI systems.

In Article 50, it also provides transparency obligations for providers and users of certain AI systems and tools:

- Providers of AI tools that generate audio, image, video, or text content must ensure that tool outputs are labeled in a machine-readable format as artificially generated or manipulated.

- Users of AI image generators, AI voice generators, AI music generators, or AI video generators must disclose when they publish deepfakes (deceptively realistic AI-generated image, audio, or video content of people, places, or events).

- Users must also label AI texts on topics of public interest as artificially generated. Exception: The text has been reviewed and editorially processed by humans.
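The user-side rules in the list above can be sketched as a small decision function. This is only an illustration of the summary given here, not a legal test: the `Content` class, its field names, and `user_must_label` are hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Content:
    """Hypothetical description of a piece of content a user wants to publish."""
    kind: str                      # "text", "image", "audio", or "video"
    ai_generated: bool
    is_deepfake: bool = False      # deceptively realistic depiction of people, places, or events
    public_interest: bool = False  # text on a topic of public interest
    human_reviewed: bool = False   # reviewed and editorially processed by humans


def user_must_label(c: Content) -> bool:
    """Return True if, per the summary above, the publishing user must disclose AI use."""
    if not c.ai_generated:
        return False
    if c.kind in {"image", "audio", "video"}:
        # Users must disclose deepfakes.
        return c.is_deepfake
    if c.kind == "text":
        # Public-interest texts must be labeled unless humans reviewed and edited them.
        return c.public_interest and not c.human_reviewed
    return False


print(user_must_label(Content("video", ai_generated=True, is_deepfake=True)))
print(user_must_label(Content("text", ai_generated=True, public_interest=True, human_reviewed=True)))
```

The separate provider obligation (machine-readable marking of tool outputs) is deliberately left out of this sketch, since it applies to the tool vendor rather than the publishing user.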

In Recitals 132-136, the necessity of labeling AI-generated content is explained in more detail.

It emphasizes that users must be able to recognize AI-generated content, which is increasingly difficult to distinguish from human-made content. The labeling requirement is intended to counter the risk of misinformation and manipulation.

Recital 134 clarifies that the labeling requirement for deepfakes should not impair freedom of expression and artistic freedom. For clearly artistic, satirical, or fictional works, only appropriate disclosure of AI use is required without affecting the work itself.

As an EU regulation, the AI Act applies directly in all member states and does not need to be transposed into national law. The labeling requirement only becomes binding once the relevant transition period has ended, however:

2.1 When Does the EU AI Act Come Into Effect?

The timeline for the EU AI Act taking effect can be summarized as follows:

- On March 13, 2024, the European Parliament approved the AI Act.

- On May 21, 2024, the EU Council approved the law. Details can be found in the press release.

- The regulation was published in the Official Journal of the EU on July 12, 2024, and entered into force 20 days later, on August 1, 2024.

- Most provisions of the AI Act apply 24 months after entry into force, from August 2, 2026.

However, some provisions have shorter transition periods:

- Bans on particularly risky AI systems apply after just 6 months

- Rules for general-purpose AI models, which underlie many generative AI tools, apply after 12 months

- Obligations for "embedded AI systems" only after 36 months

This means the EU AI Act entered into force on August 1, 2024, but most provisions, including the transparency obligations in Article 50, won't apply until August 2026. Some particularly important provisions, such as the bans on AI practices with unacceptable risk, take effect much earlier.
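The transition periods above are simple date arithmetic, which a short sketch makes concrete. It assumes an entry-into-force date of August 1, 2024 (an assumption of this example; substitute whichever date applies), and the helper `add_months` and the dictionary keys are hypothetical names. The regulation fixes its own application dates, which can differ by a day from this calculation, so treat the output as approximate.

```python
from datetime import date


def add_months(start: date, months: int) -> date:
    """Shift a date by whole months. Safe here because the start day is the 1st."""
    total = start.year * 12 + (start.month - 1) + months
    year, month_index = divmod(total, 12)
    return date(year, month_index + 1, start.day)


# Assumption: entry into force on August 1, 2024.
ENTRY_INTO_FORCE = date(2024, 8, 1)

# Transition periods in months, as listed in the timeline above.
TRANSITION_MONTHS = {
    "bans on particularly risky AI systems": 6,
    "rules for generative AI": 12,
    "most provisions": 24,
    "embedded AI systems": 36,
}

for provision, months in TRANSITION_MONTHS.items():
    print(f"{provision}: around {add_months(ENTRY_INTO_FORCE, months).isoformat()}")
```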

2.2 How Must AI Content Be Labeled According to the EU AI Act?

The EU AI Act contains no specific requirements for exactly how AI-generated content must be labeled.

The AI Act merely prescribes that AI-generated content such as texts, images, audio, and video must be labeled "clearly" as such. Users should be able to clearly recognize when content has been machine-generated.

Particularly in marketing activities using AI content, it must be clearly disclosed that these are AI creations.

When publishing AI-generated texts intended to inform the public about important topics, it must also be made transparent that the texts were artificially created.

However, how this transparency and labeling is to be specifically implemented, e.g., through disclaimer texts, watermarks on images, notes in metadata, etc., is left open by the AI Act.
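Since the AI Act leaves the format open, the following is only one conceivable way to attach a machine-readable note to AI output: a small JSON record stored alongside the file. The function `build_ai_label` and every field name are made up for this example and are not prescribed by the AI Act or by any platform.

```python
import json
from datetime import datetime, timezone


def build_ai_label(tool_name: str, content_type: str) -> str:
    """Build a hypothetical JSON label marking content as AI-generated."""
    label = {
        "ai_generated": True,          # the core machine-readable flag
        "content_type": content_type,  # e.g. "text", "image", "audio", "video"
        "generator": tool_name,        # which tool produced the content
        "labeled_at": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(label, indent=2)


# "ExampleImageTool" is a placeholder name, not a real product.
print(build_ai_label("ExampleImageTool", "image"))
```

A visible disclaimer or watermark would still be needed for human readers; a record like this only covers the machine-readable side.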

The exact design of the labeling will therefore likely be left to providers and users of AI systems for now, meaning legal clarity may only emerge through numerous court rulings (similar to GDPR).

2.3 What Are the Consequences for Violating the Labeling Requirement?

Companies that violate the labeling requirement for AI-generated content under the EU AI Act could face the following consequences:

1. High Fines

For violations of obligations under the AI Act, such as the labeling requirement, fines of up to €15 million or 3% of global annual turnover can be imposed, whichever is higher.

However, lower caps apply to small and medium-sized enterprises as well as startups: for them, the lower of the two amounts is the limit.
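The "whichever is higher" rule is easy to misread, so here is a minimal sketch of the arithmetic. The function name `fine_cap_eur` and the `is_sme` flag are hypothetical, and the SME branch assumes that the lower of the two amounts applies to small companies; check that assumption against the regulation text before relying on it.

```python
def fine_cap_eur(global_annual_turnover_eur: int, is_sme: bool = False) -> float:
    """Upper limit of a fine for violating the labeling requirement (illustrative only)."""
    fixed_cap = 15_000_000                                # EUR 15 million
    turnover_cap = global_annual_turnover_eur * 3 / 100  # 3% of global annual turnover
    if is_sme:
        # Assumption: for SMEs and startups, the lower amount is the limit.
        return min(fixed_cap, turnover_cap)
    # Otherwise the higher of the two amounts is the limit.
    return max(fixed_cap, turnover_cap)


print(fine_cap_eur(2_000_000_000))             # large company: 3% exceeds EUR 15 million
print(fine_cap_eur(100_000_000))               # 3% is below EUR 15 million, so the fixed cap applies
print(fine_cap_eur(100_000_000, is_sme=True))  # SME assumption: the lower amount applies
```

These are maximum amounts; the actual fine in a given case can be lower.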

2. Ban on AI System

Providers may be forced to remove their AI systems from the market if they repeatedly or seriously violate the regulations.

3. Competition Law Consequences

In addition to fines under the EU AI Act, violations may also have consequences under competition law, e.g., cease and desist letters from competitors.

3. Labeling on Major Online Platforms

Some major online platforms and social networks did not wait for the EU AI Act: they have voluntarily introduced a labeling requirement for creators and/or label AI content automatically. Here's a brief overview:

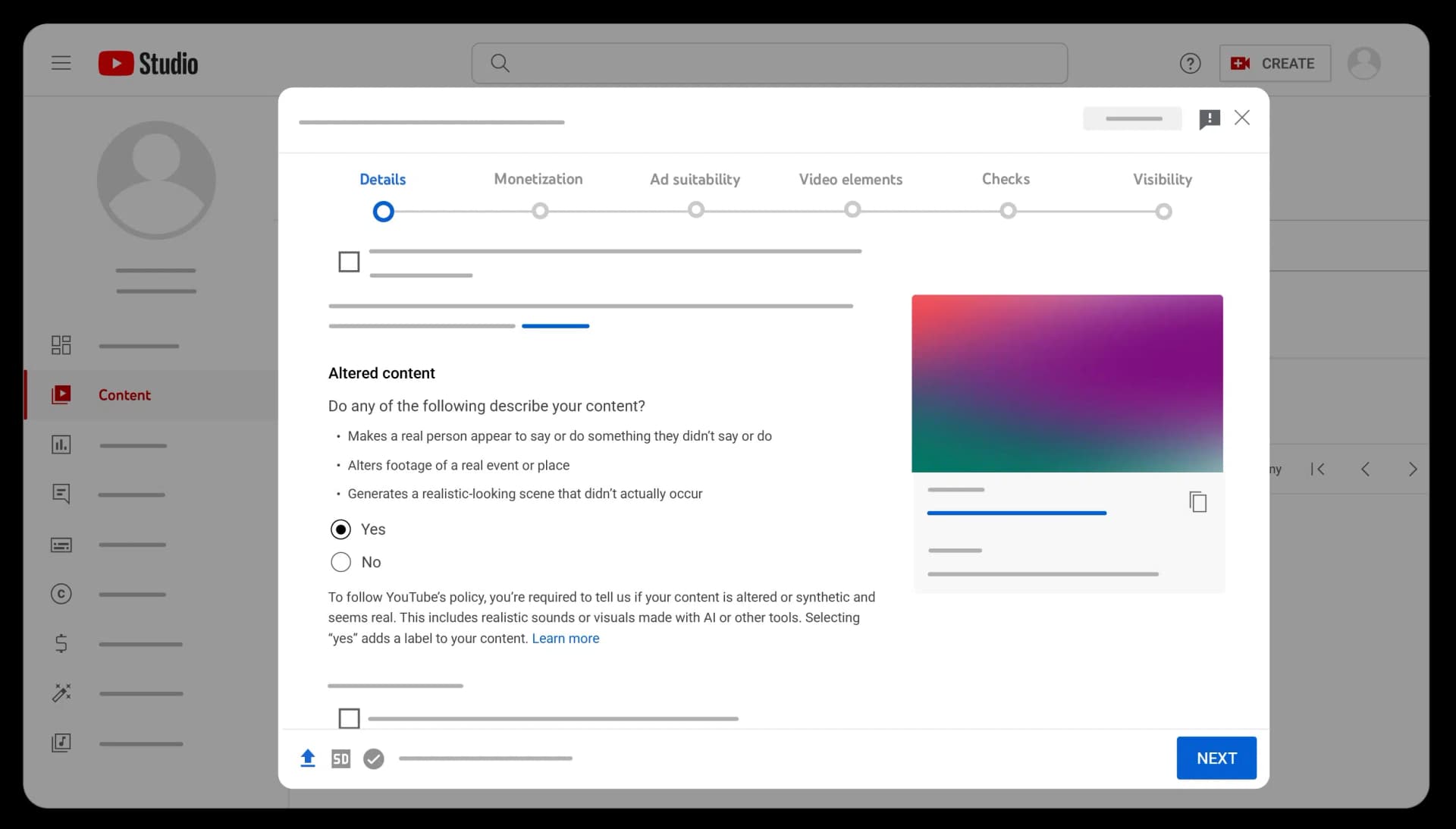

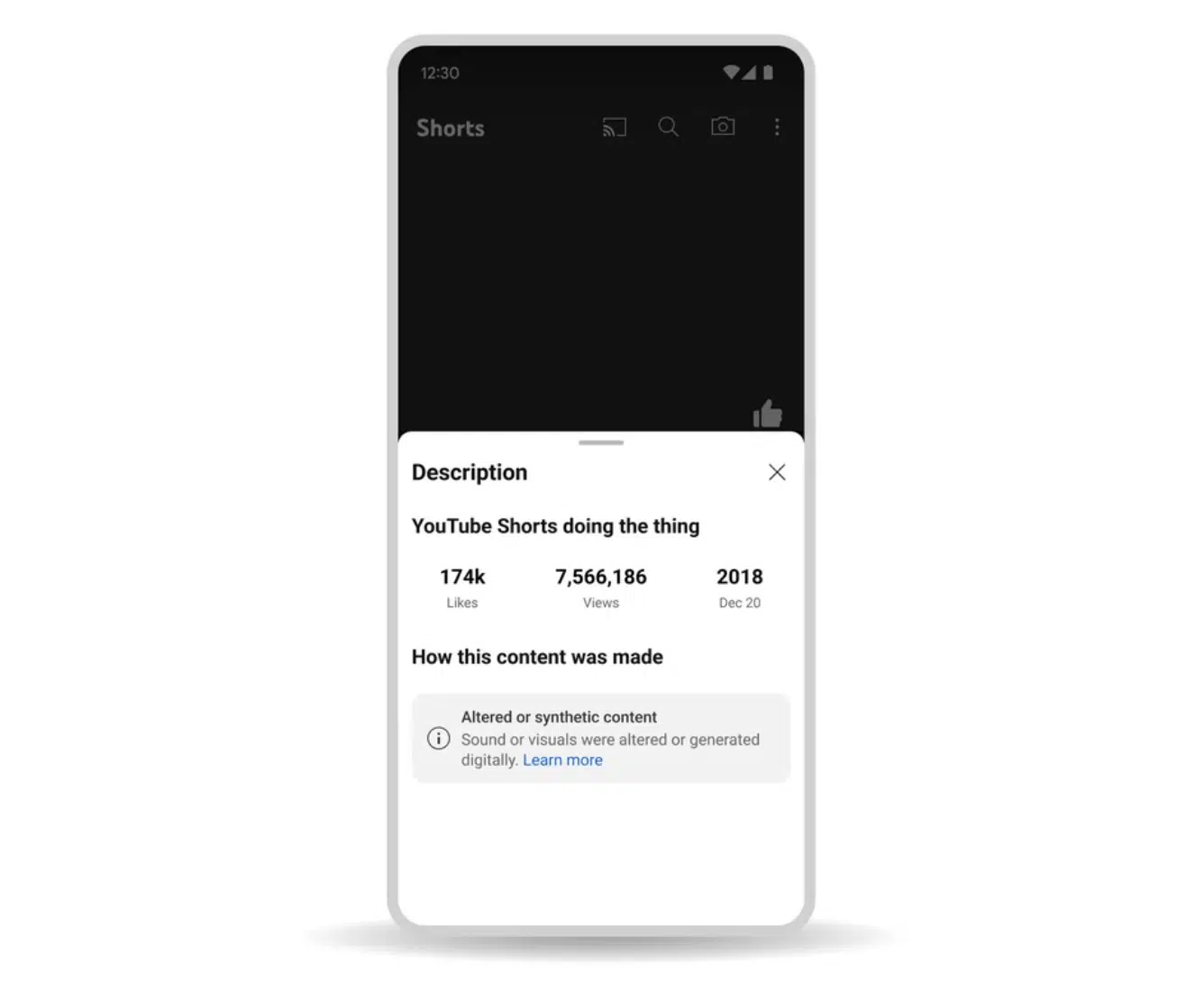

3.1 YouTube

Since November 2023, creators must indicate when uploading YouTube videos whether they contain AI-generated content that could appear real.

The labeling is done via an option in YouTube Studio called "Altered content".

The labeling is displayed in the video description.

For sensitive topics like news or elections, YouTube displays the notice directly in the video.

According to YouTube Help, violations can lead to removal of videos, exclusion from the Partner Program, or a permanent label on videos that creators cannot remove.

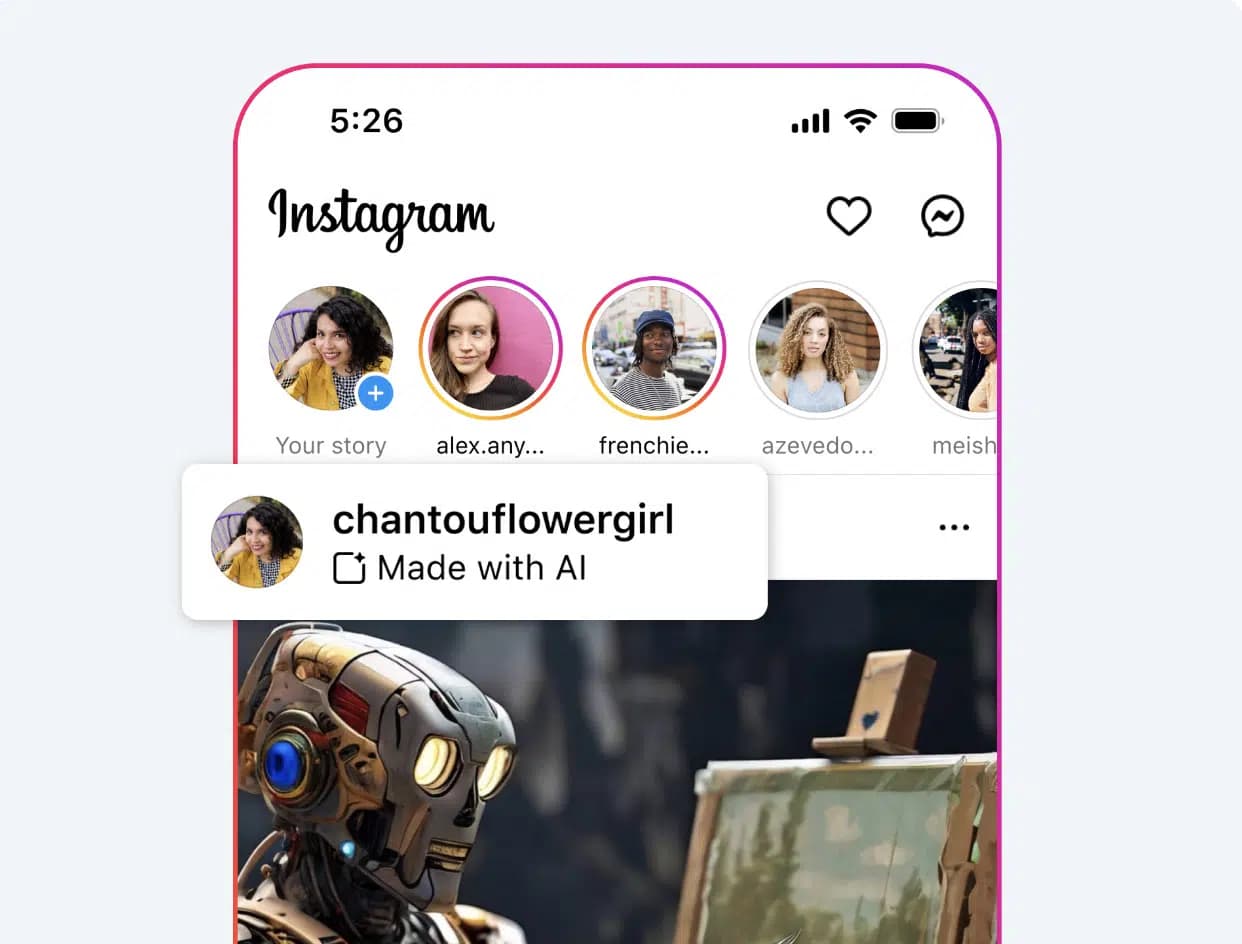

3.2 Meta (Facebook, Instagram, Threads)

Meta announced that, from May 2024, it would label a broader range of AI-generated content, such as video, audio, and images, with the "Made with AI" label.

These changes are based on recommendations from the Oversight Board as well as extensive consultations with experts and public opinion surveys.

The goal is to create more transparency and context for users, rather than simply removing such content and potentially restricting freedom of expression.

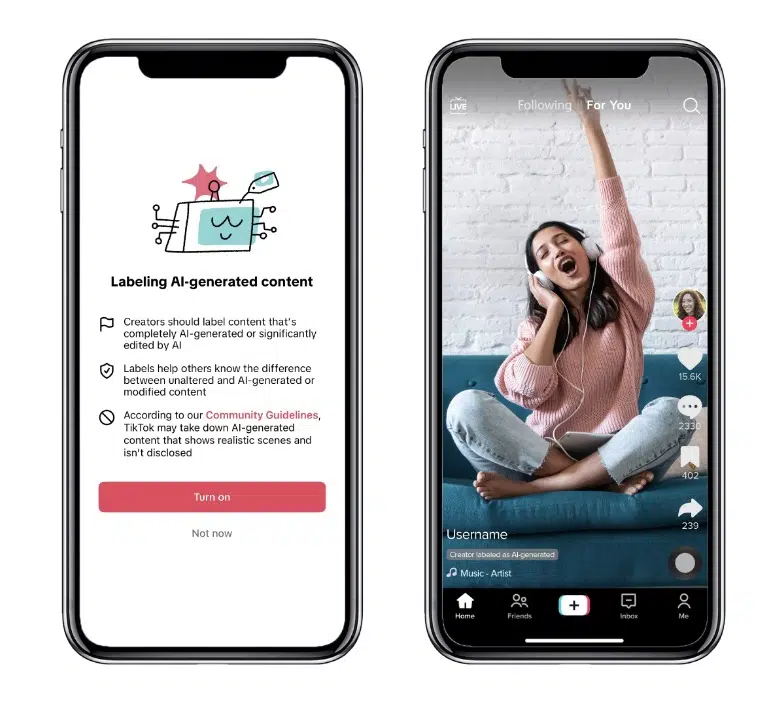

3.3 TikTok

In September 2023, TikTok introduced a new tool that allows creators to label their AI-generated content.

The goal is to create transparency and inform TikTok users when content has been created or edited with AI.

According to the press release, the company is also testing automatic labeling for AI-generated content and working with experts to develop effective labeling guidelines and implement industry best practices for transparency and responsible innovation in AI.

3.4 Twitter/X

X (formerly Twitter) currently has no specific recommendations, guidelines, or requirements for labeling AI-generated content.