Midjourney is an American tool, and its entire user interface (including instructions and help) is in English. Many creatives in Germany use it as well.

So it is no surprise that German prompts turn up on Discord and in prompt collections from time to time (as do prompts in other languages such as Spanish, Italian, or Russian).

This quickly raises the question of whether you can simply enter German prompts in Midjourney and get similarly good results.

I tested it extensively for you!

I had Midjourney execute many different prompts. I tried to translate the prompts as accurately as possible and looked at what happens when you deviate slightly from the word choice.

To make the results as comparable as possible, I always used the same seed number (images generated with the same seed tend to be similar in composition, color, and detail).
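
The test setup can be sketched in a few lines of Python. This is only an illustration of how the prompt pairs in this article are put together, not a Midjourney API: the function names `build_prompt` and `prompt_pair` are hypothetical, and the resulting strings would still have to be pasted into Midjourney by hand. The sketch assumes the usual double-hyphen spelling of the aspect-ratio and seed parameters (`--ar`, `--seed`).

```python
def build_prompt(text: str, aspect_ratio: str = "3:4", seed: int = 9876789) -> str:
    """Append the aspect-ratio and seed parameters to a prompt text."""
    return f"{text} --ar {aspect_ratio} --seed {seed}"


def prompt_pair(english: str, german: str, seed: int = 9876789) -> tuple:
    """Build an English/German pair that shares the same seed and aspect ratio."""
    return build_prompt(english, seed=seed), build_prompt(german, seed=seed)


# Hypothetical usage: the first pair tested in this article.
english_prompt, german_prompt = prompt_pair("horse", "pferd")
print(english_prompt)
print(german_prompt)
```

Keeping the seed and aspect ratio in one place makes it harder to compare two prompts that accidentally differ in more than their wording.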

- Basic German nouns work well: 'Pferd' (horse), 'Haus' (house), 'Sonne' (sun) deliver results similar to their English equivalents

- Many German adjectives are problematic: 'weinende Frau' (crying woman) shows grapevines, 'schwebendes Schloss' (floating castle) doesn't float; English prompts are significantly more reliable

- For best results: use English prompts and reserve German for culture-specific elements (e.g., 'Schloss' for a Neuschwanstein-style castle)

1. Prompts Consisting Only of Nouns

Let's start with a very simple prompt consisting of just one word (a noun):

horse vs. Pferd

Prompt 1: horse --ar 3:4 --seed 9876789

Prompt 2: pferd --ar 3:4 --seed 9876789

The images suggest that both words draw on similar training data: one image is almost identical in both sets (the second image in the first set, the third image in the second set).

Single-word prompts are great for Midjourney – but only marginally useful for the user. So let's take a step further and try multiple words. To make it easy for the tool, we'll stick to nouns:

moon, sun, stars vs. Mond, Sonne, Sterne

Prompt 1: moon, sun, stars --ar 3:4 --seed 9876789

Prompt 2: mond, sonne, sterne --ar 3:4 --seed 9876789

In these images too, we can see clear similarities, which again suggests that there is similar training data for the words in both languages.

I personally find that Set 1 contains more abstract details, which is why I like this set a bit better. To get to the bottom of this, I swapped out a single word and looked at these sets:

moon, sun, night vs. Mond, Sonne, Nacht

Prompt 1: moon, sun, night --ar 3:4 --seed 9876789

Prompt 2: mond, sonne, Nacht --ar 3:4 --seed 9876789

Here the differences become even clearer, and the two sets no longer resemble each other much. Nevertheless, all images contain elements from our prompt.

So the result is different, but both results match our prompt. What one prefers is a matter of taste, and in such cases it can be worthwhile to experiment with prompts in both languages to achieve the desired result.

2. More Complex Prompts

Prompts consisting exclusively of nouns usually generate cool images in Midjourney, but if you want something specific, they rarely lead to the desired result. So let's see what Midjourney delivers when we enter more complex prompts:

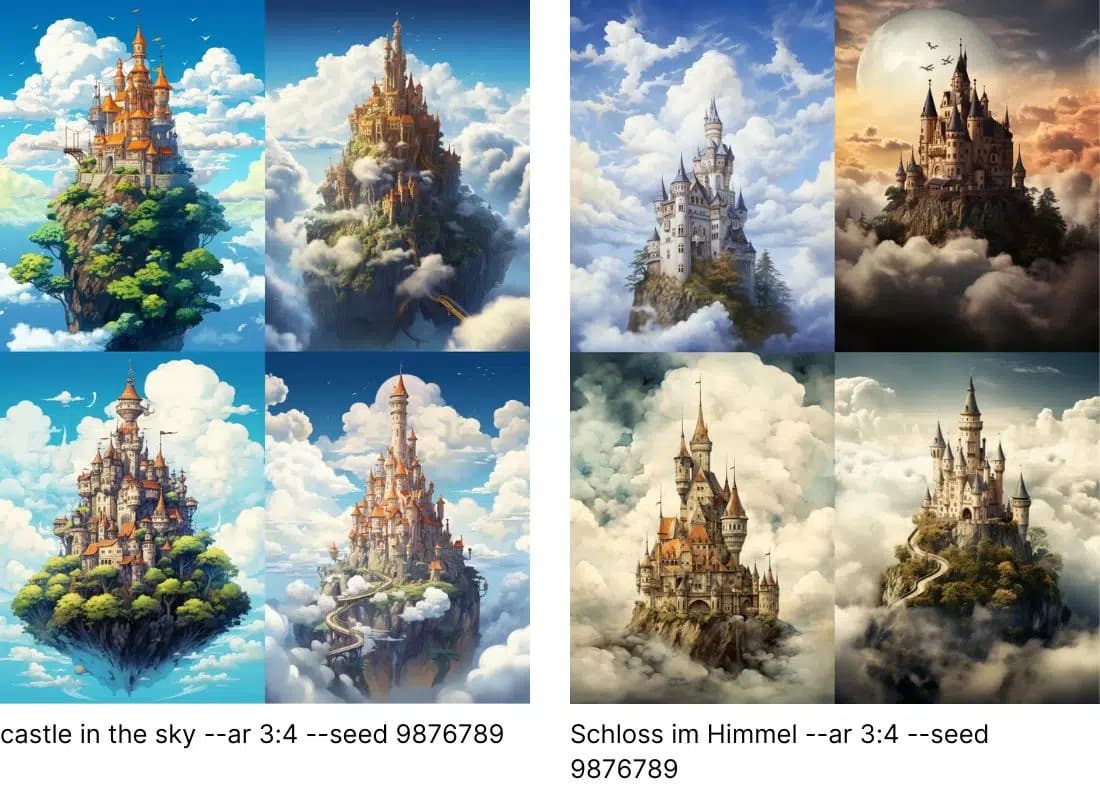

castle in the sky vs. Schloss im Himmel

Prompt 1: castle in the sky --ar 3:4 --seed 9876789

Prompt 2: Schloss im Himmel --ar 3:4 --seed 9876789

What we see here is exciting: you might expect both prompts to deliver the same result, yet there is a difference. The castle from the German prompt simply appears to stand on a high rock, whereas the English castle floats in the air in two of the images (possibly even three, since the third image doesn't show where the rock ends at the bottom).

The appearance of the castles is also somewhat different: the English "castle" always has red roofs, while the German "Schloss" has blue roofs in 3 out of 4 images. This suggests that the training data includes the famous Neuschwanstein Castle, which has blue roofs.

Of course, these are just samples that aren't representative (I don't mean to say that the term "castle" always generates castles with red roofs). But it suggests that training data in different languages also carries local references, which makes sense, as such features are naturally important for images in certain contexts.

But since I'd like an image of a floating castle in the sky, I adjusted the prompt again:

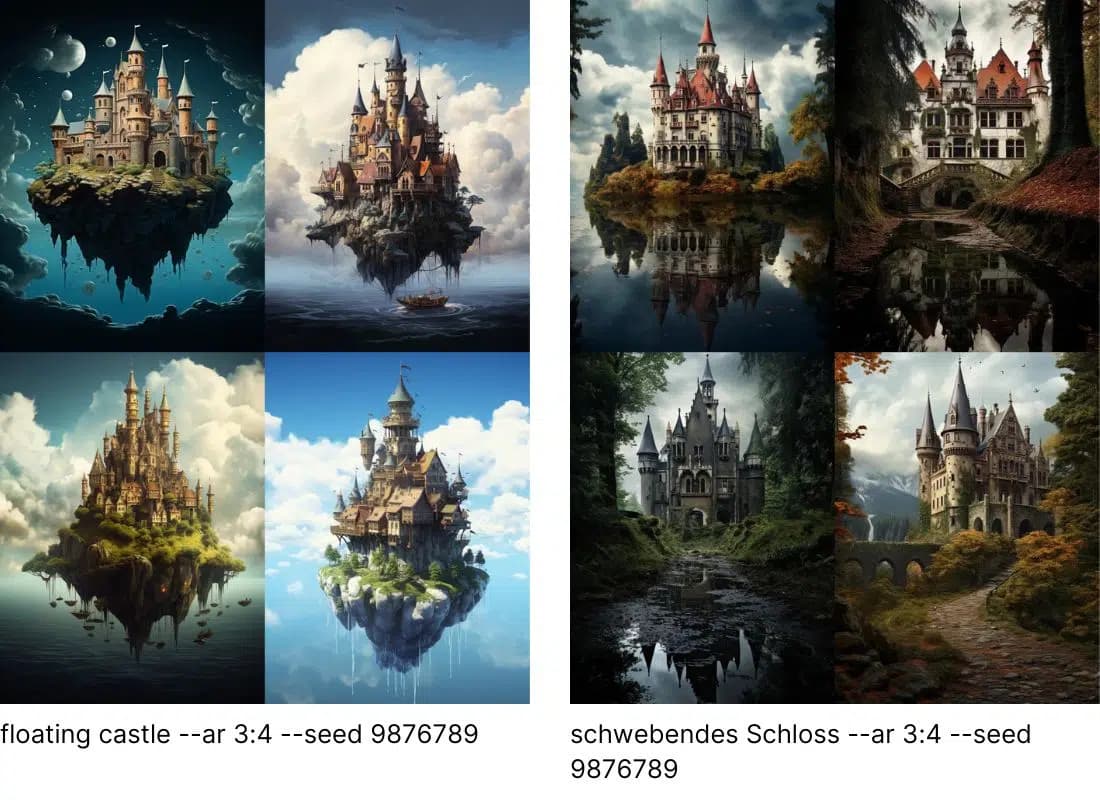

floating castle vs. schwebendes Schloss

Prompt 1: floating castle --ar 3:4 --seed 9876789

Prompt 2: schwebendes Schloss --ar 3:4 --seed 9876789

And yes, here you can see very clearly that nouns are fine, but adjectives present a different challenge. None of the German castles really float, but all four English castles do. Replacing "schwebend" (floating) with "fliegend" (flying) doesn't improve the results either.

This is of course exciting and calls for another test in which we raise the difficulty and try to create a certain mood with the image:

burning woman, strong, dark, fierce vs. brennende Frau, stark, dunkel, erbittert

Prompt 1: burning woman, strong, dark, fierce --ar 3:4 --seed 9876789

Prompt 2: brennende Frau, stark, dunkel, erbittert --ar 3:4 --seed 9876789

Okay.

The German woman is visible, but you'll search for fire in vain. Two of the women have red hair. Maybe that's supposed to symbolize fire? The mood is right, but the comparison with the English woman is rather sobering, because she is literally on fire!

Instead of adjectives, you can of course also work with nouns and rephrase the prompt. Let's see if that works:

woman on fire, strong, dark, fierce vs. frau in feuer, stark, dunkel, erbittert

Prompt 1: woman on fire, strong, dark, fierce --ar 3:4 --seed 9876789

Prompt 2: frau in feuer, stark, dunkel, erbittert --ar 3:4 --seed 9876789

That's already a lot better. In English, our woman on fire looks stronger and more combative in my opinion, but at least our German "Frau in Feuer" is burning this time.

I then tested what happens when you swap individual words in the German prompt:

frau aus feuer vs. frau in flammen

Prompt 1: frau aus feuer, stark, dunkel, erbittert --ar 3:4 --seed 9876789

Prompt 2: frau in flammen, stark, dunkel, erbittert --ar 3:4 --seed 9876789

And the result is very, very similar to the original prompt (Frau in Feuer).
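
If you want to run this kind of word-swap test yourself, a small Python sketch like the following can generate the variants. The function name `german_variants` is hypothetical and nothing here talks to Midjourney; it only assembles prompt strings (assuming the double-hyphen `--ar` and `--seed` parameters) so that every variant shares the same modifiers, aspect ratio, and seed.

```python
def german_variants(subjects, modifiers, aspect_ratio="3:4", seed=9876789):
    """Combine each subject phrase with the same modifiers and parameters."""
    suffix = ", ".join(modifiers)
    return [
        f"{subject}, {suffix} --ar {aspect_ratio} --seed {seed}"
        for subject in subjects
    ]


# The three subject phrases compared in this section.
variants = german_variants(
    ["frau in feuer", "frau aus feuer", "frau in flammen"],
    ["stark", "dunkel", "erbittert"],
)
for prompt in variants:
    print(prompt)
```

Because only the subject phrase changes, any difference between the resulting image sets can be attributed to that one phrase.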

So it seems that Midjourney has quite a lot of data for German nouns – but less for German adjectives. In English, nouns and adjectives often deliver similarly good results.

3. Adjectives in Prompts

I naturally tested this thesis a bit further:

yellow dog vs. gelber Hund

Prompt 1: yellow dog --ar 3:4 --seed 9876789

Prompt 2: gelber Hund --ar 3:4 --seed 9876789

The "gelber Hund" is very similar to the yellow dog; here too, both seem to be based on similar or identical training data. Colors are of course very important for images, so it's not surprising that there is a lot of training data for colors (as there is for nouns).

Let's now look at how emotional states work:

sad woman vs. traurige frau

Prompt 1: sad woman --ar 3:4 --seed 9876789

Prompt 2: traurige frau --ar 3:4 --seed 9876789

Here too, we have pretty similar results that all match the prompt well.

But I'd like a woman who's crying and where you can see tears, so let's try that:

crying woman vs. weinende Frau

Prompt 1: crying woman --ar 3:4 --seed 9876789

Prompt 2: weinende Frau --ar 3:4 --seed 9876789

The result is very exciting again: while our English woman sheds very expressive tears, there's no trace of tears on the German woman. Instead, all four images contain wine-related elements (grapevines). Here Midjourney seems to confuse "weinende" (crying) with "Wein" (wine).
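
How Midjourney processes text internally is not something these tests can show, so the following is only a guess made visible: text models commonly split words into smaller pieces, and "weinende" happens to begin with the same four letters as "Wein". The Python sketch below does not reproduce Midjourney's text processing in any way. It merely computes the shared letter prefix of two words using the standard library's `os.path.commonprefix`, which compares plain strings character by character; the helper name `shared_prefix` is hypothetical.

```python
import os


def shared_prefix(a: str, b: str) -> str:
    """Return the leading letters two words have in common (case-insensitive)."""
    return os.path.commonprefix([a.lower(), b.lower()])


print(shared_prefix("weinende", "Wein"))        # wein
print(repr(shared_prefix("crying", "wine")))    # '' (nothing in common)
```

The English pair shares no prefix at all, which fits the observation that "crying woman" shows tears rather than wine.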

But now I want a crying woman and try to see if I can solve this differently with a German prompt:

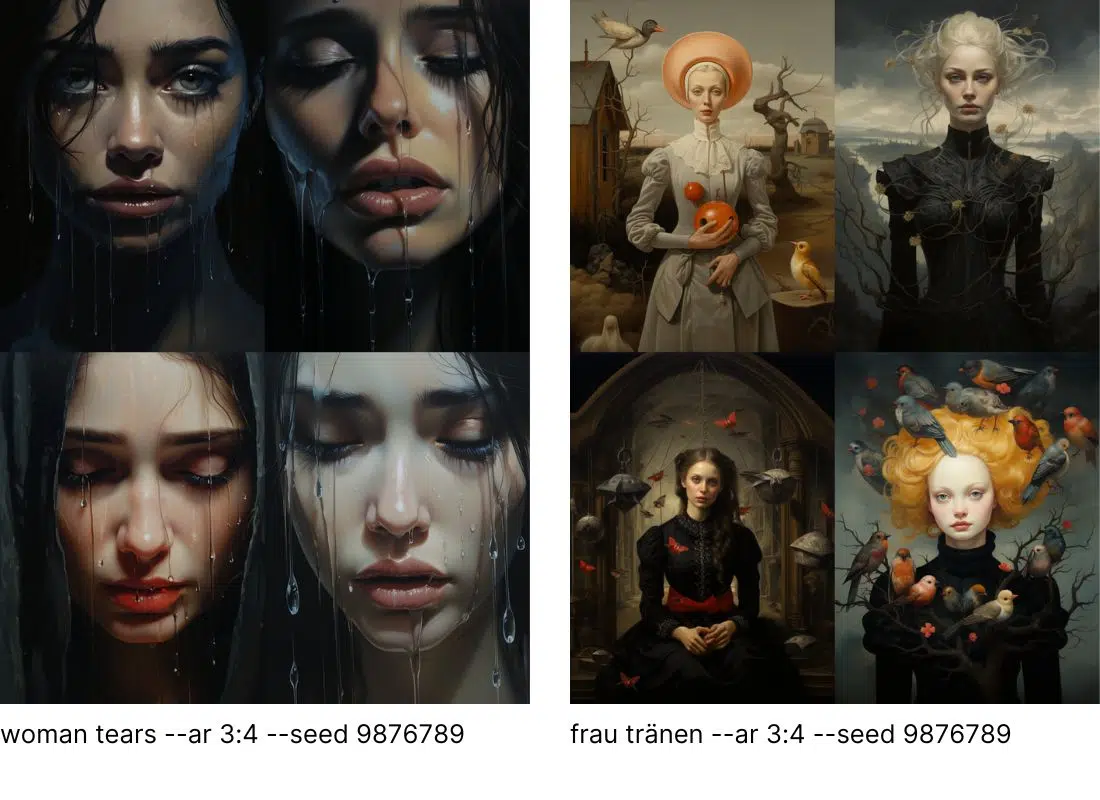

woman tears vs. frau tränen

Prompt 1: woman tears --ar 3:4 --seed 9876789

Prompt 2: frau tränen --ar 3:4 --seed 9876789

The English "woman tears" is extremely similar to "crying woman". The German "Frau Tränen", however, has no similarity to the "weinende Frau" – but I don't see tears here either. The images are dark and the mood is subdued, but somehow they don't quite hit what I expected.

Let's see whether things look different with other emotions:

happy woman vs. fröhliche Frau

Prompt 1: happy woman --ar 3:4 --seed 9876789

Prompt 2: fröhliche frau --ar 3:4 --seed 9876789

Um, yes. Some would say the German prompt captured me well: apparently I sometimes look very serious even when I'm happy. In general, though, you'd expect such a prompt to produce what Midjourney delivers for the English one.

Let's also see if the result improves when we adjust the prompt slightly:

freudige frau vs. frau lachen (/frau lacht)

Prompt 1: freudige frau --ar 3:4 --seed 9876789

Prompt 2: frau lachen --ar 3:4 --seed 9876789

The result is just as sobering as with "weinende Frau / Frau mit Tränen". No matter what I enter, the woman apparently just doesn't want to laugh. Here an English prompt gets you there much more easily and quickly.

4. Food Photography Prompts

Recipes are probably a very large application area for AI images: food photography is elaborate, expensive, and time-consuming. How nice would it be to simply generate these images on a PC?

This is now reality, and the images are becoming extremely good (with some finesse and practice, of course). But does that also hold for German prompts?

I tested it for you, of course:

potatoes with salmon and dillweed vs. kartoffeln mit lachs und dill

Prompt 1: potatoes with salmon and dillweed --ar 3:4 --seed 9876789

Prompt 2: kartoffeln mit lachs und dill --ar 3:4 --seed 9876789

The images are already quite different, and what particularly stands out to me: While in the English ones the potato dominates or is at least visible in every image, in the German dish we have a pretty dominant salmon. Potatoes are sometimes nowhere to be found.

Let's try another dish, this time a classic: pasta.

farfalle with mushrooms, cream and basil vs. farfalle mit pilzen, sahne und Basilikum

Prompt 1: farfalle with mushrooms, cream and basil --ar 3:4 --seed 9876789

Prompt 2: farfalle mit pilzen, sahne und Basilikum --ar 3:4 --seed 9876789

I find the result super exciting: With the English prompt, the farfalle are correctly depicted as pasta for our context.

With the German prompt, however, they become butterflies and rather fantastical structures. The butterflies are easy to explain: "farfalle" means "butterflies" in Italian. So with the German prompt, Midjourney doesn't seem to access the same training data for this term as with the English one.

This in turn suggests that Midjourney does take the rest of the prompt into account when interpreting a term: in an English prompt, farfalle are depicted as pasta, while in a German prompt the Italian meaning (butterflies) is used.

5. Conclusion

Judging by these tests, Midjourney (like most other AI image generators) doesn't seem to translate German prompts into English before generating an image. Instead, it appears to have separate training data for each language and applies it accordingly. Put simply, Midjourney doesn't seem to differentiate between languages at all; it just has specific data stored for each combination of letters.

However, several sets of data can be stored for one term and retrieved depending on the context (example: farfalle).

For English words, there is generally significantly more stored data, as this is where the developers focus. For words from other languages, there is usually less, although roughly speaking, frequently used nouns (horse, forest, man, woman, sun, etc.) and words often used to describe images (colors, perspectives, styles) tend to have more data behind them. For those words, the images turn out similarly well regardless of which language you use.

Midjourney generally has great difficulty with relational words: on, under, next to, at, in front of, behind, etc. This is already a challenge in English; in German, you hit the limits even faster.

And food images with German prompts also go in rather strange directions.